Linux 应用

Linux 主要作为Linux发行版(通常被称为"distro")的一部分而使用。这些发行版由个人,松散组织的团队,以及商业机构和志愿者组织编写。它们通常包括了其他的系统软件和应用软件,以及一个用来简化系统初始安装的安装工具,和让软件安装升级的集成管理器。大多数系统还包括了像提供GUI界面的XFree86之类的曾经运行于BSD的程序。 一个典型的Linux发行版包括:Linux内核,一些GNU程序库和工具,命令行shell,图形界面的X Window系统和相应的桌面环境,如KDE或GNOME,并包含数千种从办公套件,编译器,文本编辑器到科学工具的应用软件。

- Zookeeper 安装

- FTP(File Transfer Protocol)介绍

- CI 一整套服务

- LDAP 安装和配置

- Kubernets(K8S) 使用

- Hadoop 安装和配置

- Gitlab 安装和配置

- Rinetd:一个用户端口重定向工具

Zookeeper 安装

Docker 部署 Zookeeper

单个实例

- 官网仓库:https://hub.docker.com/r/library/zookeeper/

- 单个实例:

docker run -d --restart always --name one-zookeeper -p 2181:2181 -v /etc/localtime:/etc/localtime zookeeper:latest- 默认端口暴露是:

This image includes EXPOSE 2181 2888 3888 (the zookeeper client port, follower port, election port respectively)

- 默认端口暴露是:

- 容器中的几个重要目录(有需要挂载的可以指定):

/data/datalog/conf

单机多个实例(集群)

- 创建 docker compose 文件:

vim zookeeper.yml - 下面内容来自官网仓库:https://hub.docker.com/r/library/zookeeper/

version: '3.1'

services:

zoo1:

image: zookeeper

restart: always

hostname: zoo1

ports:

- 2181:2181

environment:

ZOO_MY_ID: 1

ZOO_SERVERS: server.1=0.0.0.0:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888

zoo2:

image: zookeeper

restart: always

hostname: zoo2

ports:

- 2182:2181

environment:

ZOO_MY_ID: 2

ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zoo3:2888:3888

zoo3:

image: zookeeper

restart: always

hostname: zoo3

ports:

- 2183:2181

environment:

ZOO_MY_ID: 3

ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=0.0.0.0:2888:3888

- 启动:

docker-compose -f zookeeper.yml -p zk_test up -d- 参数 -p zk_test 表示这个 compose project 的名字,等价于:

COMPOSE_PROJECT_NAME=zk_test docker-compose -f zookeeper.yml up -d - 不指定项目名称,Docker-Compose 默认以当前文件目录名作为应用的项目名

- 报错是正常情况的。

- 参数 -p zk_test 表示这个 compose project 的名字,等价于:

- 停止:

docker-compose -f zookeeper.yml -p zk_test stop

先安装 nc 再来校验 zookeeper 集群情况

- 环境:CentOS 7.4

- 官网下载:https://nmap.org/download.html,找到 rpm 包

- 当前时间(201803)最新版本下载:

wget https://nmap.org/dist/ncat-7.60-1.x86_64.rpm - 安装:

sudo rpm -i ncat-7.60-1.x86_64.rpm - ln 下:

sudo ln -s /usr/bin/ncat /usr/bin/nc - 检验:

nc --version

校验

- 命令:

echo stat | nc 127.0.0.1 2181,得到如下信息:

Zookeeper version: 3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0, built on 11/01/2017 18:06 GMT

Clients:

/172.21.0.1:58872[0](queued=0,recved=1,sent=0)

Latency min/avg/max: 0/0/0

Received: 1

Sent: 0

Connections: 1

Outstanding: 0

Zxid: 0x100000000

Mode: follower

Node count: 4

- 命令:

echo stat | nc 127.0.0.1 2182,得到如下信息:

Zookeeper version: 3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0, built on 11/01/2017 18:06 GMT

Clients:

/172.21.0.1:36190[0](queued=0,recved=1,sent=0)

Latency min/avg/max: 0/0/0

Received: 1

Sent: 0

Connections: 1

Outstanding: 0

Zxid: 0x500000000

Mode: follower

Node count: 4

- 命令:

echo stat | nc 127.0.0.1 2183,得到如下信息:

Zookeeper version: 3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0, built on 11/01/2017 18:06 GMT

Clients:

/172.21.0.1:33344[0](queued=0,recved=1,sent=0)

Latency min/avg/max: 0/0/0

Received: 1

Sent: 0

Connections: 1

Outstanding: 0

Zxid: 0x500000000

Mode: leader

Node count: 4

多机多个实例(集群)

- 三台机子:

- 内网 ip:

172.24.165.129,外网 ip:47.91.22.116 - 内网 ip:

172.24.165.130,外网 ip:47.91.22.124 - 内网 ip:

172.24.165.131,外网 ip:47.74.6.138

- 内网 ip:

- 修改三台机子 hostname:

- 节点 1:

hostnamectl --static set-hostname youmeekhost1 - 节点 2:

hostnamectl --static set-hostname youmeekhost2 - 节点 3:

hostnamectl --static set-hostname youmeekhost3

- 节点 1:

- 三台机子的 hosts 都修改为如下内容:

vim /etc/hosts

172.24.165.129 youmeekhost1

172.24.165.130 youmeekhost2

172.24.165.131 youmeekhost3

- 节点 1:

docker run -d \

-v /data/docker/zookeeper/data:/data \

-v /data/docker/zookeeper/log:/datalog \

-e ZOO_MY_ID=1 \

-e "ZOO_SERVERS=server.1=youmeekhost1:2888:3888 server.2=youmeekhost2:2888:3888 server.3=youmeekhost3:2888:3888" \

--name=zookeeper1 --net=host --restart=always zookeeper

- 节点 2:

docker run -d \

-v /data/docker/zookeeper/data:/data \

-v /data/docker/zookeeper/log:/datalog \

-e ZOO_MY_ID=2 \

-e "ZOO_SERVERS=server.1=youmeekhost1:2888:3888 server.2=youmeekhost2:2888:3888 server.3=youmeekhost3:2888:3888" \

--name=zookeeper2 --net=host --restart=always zookeeper

- 节点 3:

docker run -d \

-v /data/docker/zookeeper/data:/data \

-v /data/docker/zookeeper/log:/datalog \

-e ZOO_MY_ID=3 \

-e "ZOO_SERVERS=server.1=youmeekhost1:2888:3888 server.2=youmeekhost2:2888:3888 server.3=youmeekhost3:2888:3888" \

--name=zookeeper3 --net=host --restart=always zookeeper

需要环境

- JDK 安装

下载安装

- 官网:https://zookeeper.apache.org/

- 此时(201702)最新稳定版本:Release

3.4.9 - 官网下载:http://www.apache.org/dyn/closer.cgi/zookeeper/

- 我这里以:

zookeeper-3.4.8.tar.gz为例 - 安装过程:

mkdir -p /usr/program/zookeeper/datacd /opt/setupstar zxvf zookeeper-3.4.8.tar.gzmv /opt/setups/zookeeper-3.4.8 /usr/program/zookeepercd /usr/program/zookeeper/zookeeper-3.4.8/confmv zoo_sample.cfg zoo.cfgvim zoo.cfg

- 将配置文件中的这个值:

- 原值:

dataDir=/tmp/zookeeper - 改为:

dataDir=/usr/program/zookeeper/data

- 原值:

- 防火墙开放2181端口

iptables -A INPUT -p tcp -m tcp --dport 2181 -j ACCEPTservice iptables saveservice iptables restart

- 启动 zookeeper:

sh /usr/program/zookeeper/zookeeper-3.4.8/bin/zkServer.sh start - 停止 zookeeper:

sh /usr/program/zookeeper/zookeeper-3.4.8/bin/zkServer.sh stop - 查看 zookeeper 状态:

sh /usr/program/zookeeper/zookeeper-3.4.8/bin/zkServer.sh status- 如果是集群环境,下面几种角色

- leader

- follower

- 如果是集群环境,下面几种角色

集群环境搭建

确定机子环境

- 集群环境最少节点是:3,且节点数必须是奇数,生产环境推荐是:5 个机子节点。

- 系统都是 CentOS 6

- 机子 1:192.168.1.121

- 机子 2:192.168.1.111

- 机子 3:192.168.1.112

配置

- 在三台机子上都做如上文的流程安装,再补充修改配置文件:

vim /usr/program/zookeeper/zookeeper-3.4.8/conf/zoo.cfg - 三台机子都增加下面内容:

server.1=192.168.1.121:2888:3888

server.2=192.168.1.111:2888:3888

server.3=192.168.1.112:2888:3888

- 在 机子 1 增加一个该文件:

vim /usr/program/zookeeper/data/myid,文件内容填写:1 - 在 机子 2 增加一个该文件:

vim /usr/program/zookeeper/data/myid,文件内容填写:2 - 在 机子 3 增加一个该文件:

vim /usr/program/zookeeper/data/myid,文件内容填写:3 - 然后在三台机子上都启动 zookeeper:

sh /usr/program/zookeeper/zookeeper-3.4.8/bin/zkServer.sh start - 分别查看三台机子的状态:

sh /usr/program/zookeeper/zookeeper-3.4.8/bin/zkServer.sh status,应该会得到类似这样的结果:

Using config: /usr/program/zookeeper/zookeeper-3.4.8/bin/../conf/zoo.cfg

Mode: follower 或者 Mode: leader

Zookeeper 客户端工具

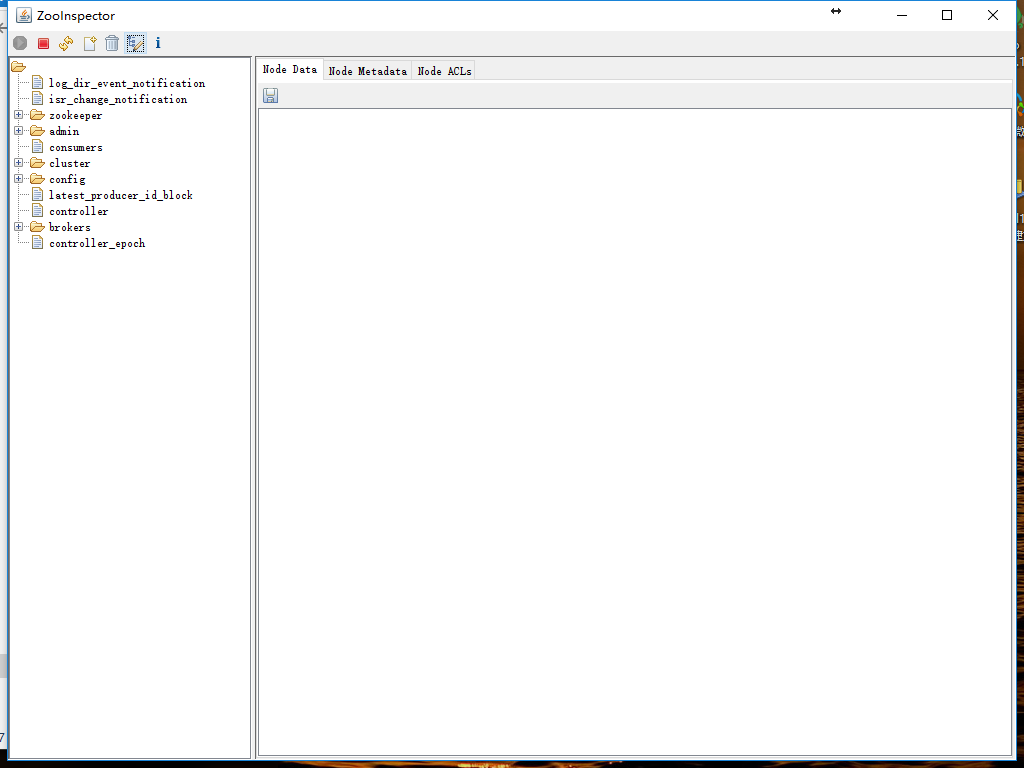

ZooInspector

- 下载地址:https://issues.apache.org/jira/secure/attachment/12436620/ZooInspector.zip

- 解压,双击 jar 文件,效果如下:

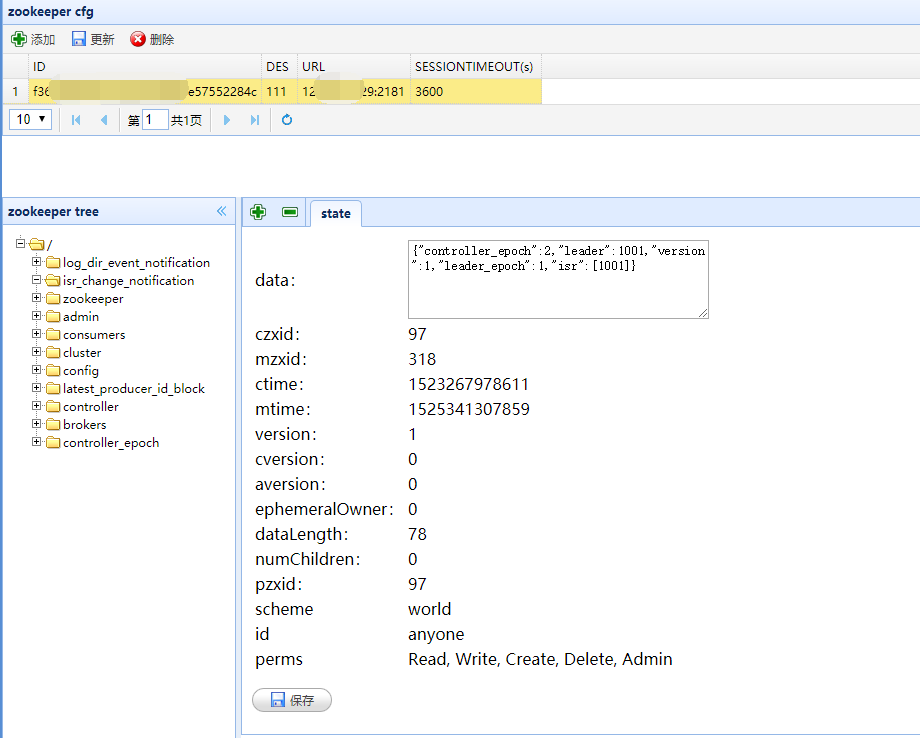

zooweb

- 下载地址:https://github.com/zhuhongyu345/zooweb

- Spring Boot 的 Web 项目,直接:

java -jar zooweb-1.0.jar启动 web 服务,然后访问:http://127.0.0.1:9345

资料

FTP(File Transfer Protocol)介绍

FTP 安装

-

查看是否已安装:

-

CentOS:

rpm -qa | grep vsftpd -

Ubuntu:

dpkg -l | grep vsftpd -

安装:

-

CentOS 6:

sudo yum install -y vsftpd -

Ubuntu:

sudo apt-get install -y vsftpd

FTP 使用之前要点

- 关闭 CentOS 上的 SELinux 组件(Ubuntu 体系是没有这东西的)。

- 查看 SELinux 开启状态:

sudo getenforce- 有如下三种状态,默认是 Enforcing

- Enforcing(开启)

- Permissive(开启,但是只起到警告作用,属于比较轻的开启)

- Disabled(关闭)

- 临时关闭:

- 命令:

sudo setenforce 0

- 命令:

- 临时开启:

- 命令:

sudo setenforce 1

- 命令:

- 永久关闭:

- 命令:

sudo vim /etc/selinux/config - 将:

SELINUX=enforcing改为SELINUX=disbaled,配置好之后需要重启系统。

- 命令:

- 有如下三种状态,默认是 Enforcing

FTP 服务器配置文件常用参数

- vsftpd 默认是支持使用 Linux 系统里的账号进行登录的(登录上去可以看到自己的 home 目录内容),权限跟 Linux 的账号权限一样。但是建议使用软件提供的虚拟账号管理体系功能,用虚拟账号登录。

- 配置文件介绍(记得先备份):

sudo vim /etc/vsftpd/vsftpd.conf,比较旧的系统版本是:vim /etc/vsftpd.conf - 该配置主要参数解释:

- anonymous_enable=NO #不允许匿名访问,改为YES即表示可以匿名登录

- anon_upload_enable=YES #是否允许匿名用户上传

- anon_mkdir_write_enable=YES #是否允许匿名用户创建目录

- local_enable=YES #是否允许本地用户,也就是linux系统的已有账号,如果你要FTP的虚拟账号,那可以改为NO

- write_enable=YES #是否允许本地用户具有写权限

- local_umask=022 #本地用户掩码

- chroot_list_enable=YES #不锁定用户在自己的家目录,默认是注释,建议这个一定要开,比如本地用户judasn,我们只能看到/home/judasn,没办法看到/home目录

- chroot_list_file=/etc/vsftpd/chroot_list #该选项是配合上面选项使用的。此文件中的用户将启用 chroot,如果上面的功能开启是不够的还要把用户名加到这个文件里面。配置好后,登录的用户,默认登录上去看到的根目录就是自己的home目录。

- listen=YES #独立模式

- userlist_enable=YES #用户访问控制,如果是YES,则表示启用vsftp的虚拟账号功能,虚拟账号配置文件是/etc/vsftpd/user_list

- userlist_deny=NO #这个属性在配置文件是没有的,当userlist_enable=YES,这个值也为YES,则user_list文件中的用户不能登录FTP,列表外的用户可以登录,也可以起到一个黑名单的作用。当userlist_enable=YES,这个值为NO,则user_list文件中的用户能登录FTP,列表外的用户不可以登录,也可以起到一个白名单的作用。如果同一个用户即在白名单中又在ftpusers黑名单文件中,那还是会以黑名单为前提,对应账号没法登录。

- tcp_wrappers=YES #是否启用TCPWrappers管理服务

- FTP用户黑名单配置文件:

sudo vim /etc/vsftpd/ftpusers,默认root用户也在黑名单中 - 控制FTP用户登录配置文件:

sudo vim /etc/vsftpd/user_list - 启动服务:

service vsftpd restart

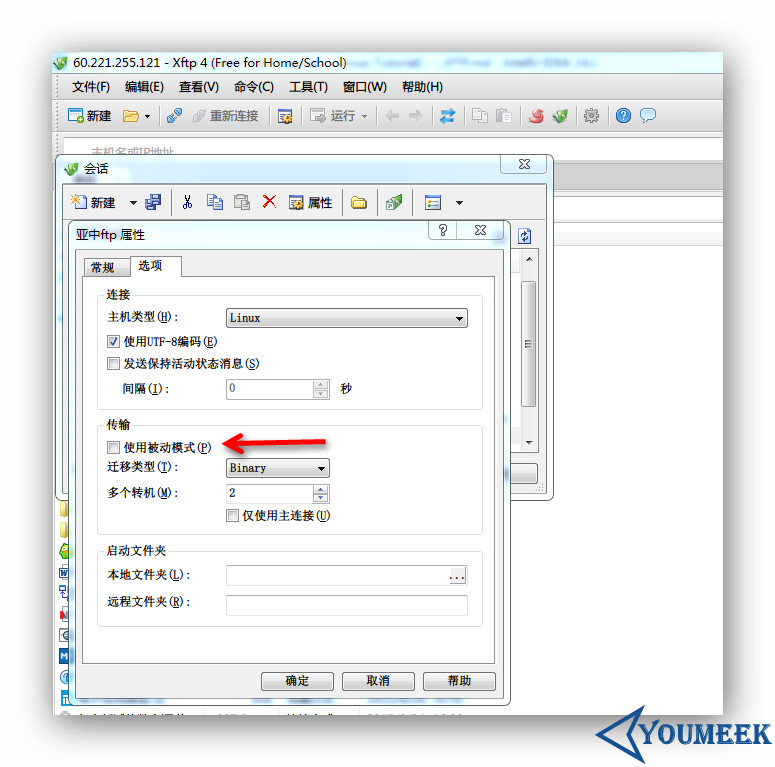

vsftpd 的两种传输模式

- 分为:主动模式(PORT)和被动模式(PASV)。这两个模式会涉及到一些端口问题,也就涉及到防火墙问题,所以要特别注意。主动模式比较简单,只要在防火墙上放开放开 21 和 20 端口即可。被动模式则要根据情况放开一个端口段。

- 上图箭头:xftp 新建连接默认都是勾选被动模式的,所以如果要使用主动模式,在该连接的属性中是要去掉勾选。

vsftpd 的两种运行模式

- 分为:xinetd 模式和 standalone 模式

- xinetd 模式:由 xinetd 作为 FTP 的守护进程,负责 21 端口的监听,一旦外部发起对 21 端口的连接,则调用 FTP 的主程序处理,连接完成后,则关闭 FTP 主程序,释放内存资源。好处是资源占用少,适合 FTP 连接数较少的场合。

- standalone 模式:直接使用 FTP 主程序作为 FTP 的守护进程,负责 21 端口的监听,由于无需经过 xinetd 的前端代理,响应速度快,适合连接数 较大的情况,但由于 FTP 主程序长期驻留内存,故较耗资源。

- standalone 一次性启动,运行期间一直驻留在内存中,优点是对接入信号反应快,缺点是损耗了一定的系统资源,因此经常应用于对实时反应要求较高的 专业 FTP 服务器。

- xinetd 恰恰相反,由于只在外部连接发送请求时才调用 FTP 进程,因此不适合应用在同时连接数量较多的系统。此外,xinetd 模式不占用系统资源。除了反应速度和占用资源两方面的影响外,vsftpd 还提供了一些额外的高级功能,如 xinetd 模式支持 per_IP (单一 IP)限制,而 standalone 模式则更有利于 PAM 验证功能的应用。

- 配置 xinetd 模式:

- 编辑配置文件:

sudo vim /etc/xinetd.d/vsftpd - 属性信息改为如下信息:

- disable = no

- socket_type = stream

- wait = no #这表示设备是激活的,它正在使用标准的TCP Sockets

- 编辑配置文件:

sudo vim /etc/vsftpd/vsftpd.conf - 如果该配置选项中的有

listen=YES,则要注释掉

- 编辑配置文件:

- 重启 xinetd 服务,命令:

sudo /etc/rc.d/init.d/xinetd restart - 配置 standalone 模式:

- 编辑配置文件:

sudo vim /etc/xinetd.d/vsftpd - 属性信息改为如下信息:

- disable = yes

- 编辑配置文件:

sudo vim /etc/vsftpd/vsftpd.conf - 属性信息改为如下信息:

- Listen=YES(如果是注释掉则要打开注释)

- 编辑配置文件:

- 重启服务:

sudo service vsftpd restart

- 配置 xinetd 模式:

FTP 资料

- http://www.jikexueyuan.com/course/994.html

- http://www.while0.com/36.html

- http://www.cnblogs.com/CSGrandeur/p/3754126.html

- http://www.centoscn.com/image-text/config/2015/0613/5651.html

- http://wiki.ubuntu.org.cn/Vsftpd

CI 一整套服务

环境说明

- CentOS 7.7

- 两台机子(一台机子也是可以,内存至少要 8G)

- 一台:Gitlab + Redis + Postgresql

- 硬件推荐:内存 4G

- 端口安排

- Gitlab:10080

- 一台:Nexus + Jenkins + SonarQube + Postgresql

- 硬件推荐:内存 8G

- 端口安排

- SonarQube:19000

- Nexus:18081

- Jenkins:18080

- 一台:Gitlab + Redis + Postgresql

Gitlab + Redis + Postgresql

- 预计会使用内存:2G 左右

- 这套方案来自(部分内容根据自己情况进行了修改):https://github.com/sameersbn/docker-gitlab

- 创建宿主机挂载目录:

mkdir -p /data/docker/gitlab/gitlab /data/docker/gitlab/redis /data/docker/gitlab/postgresql - 赋权(避免挂载的时候,一些程序需要容器中的用户的特定权限使用):

chmod -R 777 /data/docker/gitlab/gitlab /data/docker/gitlab/redis /data/docker/gitlab/postgresql - 这里使用 docker-compose 的启动方式,所以需要创建 docker-compose.yml 文件:

version: '2'

services:

redis:

restart: always

image: sameersbn/redis:latest

command:

- --loglevel warning

volumes:

- /data/docker/gitlab/redis:/var/lib/redis:Z

postgresql:

restart: always

image: sameersbn/postgresql:9.6-2

volumes:

- /data/docker/gitlab/postgresql:/var/lib/postgresql:Z

environment:

- DB_USER=gitlab

- DB_PASS=password

- DB_NAME=gitlabhq_production

- DB_EXTENSION=pg_trgm

gitlab:

restart: always

image: sameersbn/gitlab:10.4.2-1

depends_on:

- redis

- postgresql

ports:

- "10080:80"

- "10022:22"

volumes:

- /data/docker/gitlab/gitlab:/home/git/data:Z

environment:

- DEBUG=false

- DB_ADAPTER=postgresql

- DB_HOST=postgresql

- DB_PORT=5432

- DB_USER=gitlab

- DB_PASS=password

- DB_NAME=gitlabhq_production

- REDIS_HOST=redis

- REDIS_PORT=6379

- TZ=Asia/Shanghai

- GITLAB_TIMEZONE=Beijing

- GITLAB_HTTPS=false

- SSL_SELF_SIGNED=false

- GITLAB_HOST=192.168.0.105

- GITLAB_PORT=10080

- GITLAB_SSH_PORT=10022

- GITLAB_RELATIVE_URL_ROOT=

- GITLAB_SECRETS_DB_KEY_BASE=long-and-random-alphanumeric-string

- GITLAB_SECRETS_SECRET_KEY_BASE=long-and-random-alphanumeric-string

- GITLAB_SECRETS_OTP_KEY_BASE=long-and-random-alphanumeric-string

- GITLAB_ROOT_PASSWORD=

- GITLAB_ROOT_EMAIL=

- GITLAB_NOTIFY_ON_BROKEN_BUILDS=true

- GITLAB_NOTIFY_PUSHER=false

- GITLAB_EMAIL=notifications@example.com

- GITLAB_EMAIL_REPLY_TO=noreply@example.com

- GITLAB_INCOMING_EMAIL_ADDRESS=reply@example.com

- GITLAB_BACKUP_SCHEDULE=daily

- GITLAB_BACKUP_TIME=01:00

- SMTP_ENABLED=false

- SMTP_DOMAIN=www.example.com

- SMTP_HOST=smtp.gmail.com

- SMTP_PORT=587

- SMTP_USER=mailer@example.com

- SMTP_PASS=password

- SMTP_STARTTLS=true

- SMTP_AUTHENTICATION=login

- IMAP_ENABLED=false

- IMAP_HOST=imap.gmail.com

- IMAP_PORT=993

- IMAP_USER=mailer@example.com

- IMAP_PASS=password

- IMAP_SSL=true

- IMAP_STARTTLS=false

- OAUTH_ENABLED=false

- OAUTH_AUTO_SIGN_IN_WITH_PROVIDER=

- OAUTH_ALLOW_SSO=

- OAUTH_BLOCK_AUTO_CREATED_USERS=true

- OAUTH_AUTO_LINK_LDAP_USER=false

- OAUTH_AUTO_LINK_SAML_USER=false

- OAUTH_EXTERNAL_PROVIDERS=

- OAUTH_CAS3_LABEL=cas3

- OAUTH_CAS3_SERVER=

- OAUTH_CAS3_DISABLE_SSL_VERIFICATION=false

- OAUTH_CAS3_LOGIN_URL=/cas/login

- OAUTH_CAS3_VALIDATE_URL=/cas/p3/serviceValidate

- OAUTH_CAS3_LOGOUT_URL=/cas/logout

- OAUTH_GOOGLE_API_KEY=

- OAUTH_GOOGLE_APP_SECRET=

- OAUTH_GOOGLE_RESTRICT_DOMAIN=

- OAUTH_FACEBOOK_API_KEY=

- OAUTH_FACEBOOK_APP_SECRET=

- OAUTH_TWITTER_API_KEY=

- OAUTH_TWITTER_APP_SECRET=

- OAUTH_GITHUB_API_KEY=

- OAUTH_GITHUB_APP_SECRET=

- OAUTH_GITHUB_URL=

- OAUTH_GITHUB_VERIFY_SSL=

- OAUTH_GITLAB_API_KEY=

- OAUTH_GITLAB_APP_SECRET=

- OAUTH_BITBUCKET_API_KEY=

- OAUTH_BITBUCKET_APP_SECRET=

- OAUTH_SAML_ASSERTION_CONSUMER_SERVICE_URL=

- OAUTH_SAML_IDP_CERT_FINGERPRINT=

- OAUTH_SAML_IDP_SSO_TARGET_URL=

- OAUTH_SAML_ISSUER=

- OAUTH_SAML_LABEL="Our SAML Provider"

- OAUTH_SAML_NAME_IDENTIFIER_FORMAT=urn:oasis:names:tc:SAML:2.0:nameid-format:transient

- OAUTH_SAML_GROUPS_ATTRIBUTE=

- OAUTH_SAML_EXTERNAL_GROUPS=

- OAUTH_SAML_ATTRIBUTE_STATEMENTS_EMAIL=

- OAUTH_SAML_ATTRIBUTE_STATEMENTS_NAME=

- OAUTH_SAML_ATTRIBUTE_STATEMENTS_FIRST_NAME=

- OAUTH_SAML_ATTRIBUTE_STATEMENTS_LAST_NAME=

- OAUTH_CROWD_SERVER_URL=

- OAUTH_CROWD_APP_NAME=

- OAUTH_CROWD_APP_PASSWORD=

- OAUTH_AUTH0_CLIENT_ID=

- OAUTH_AUTH0_CLIENT_SECRET=

- OAUTH_AUTH0_DOMAIN=

- OAUTH_AZURE_API_KEY=

- OAUTH_AZURE_API_SECRET=

- OAUTH_AZURE_TENANT_ID=

- 启动:

docker-compose up -d,启动比较慢,等个 2 分钟左右。 - 浏览器访问 Gitlab:http://192.168.0.105:10080/users/sign_in

- 默认用户是 root,密码首次访问必须重新设置,并且最小长度为 8 位,我习惯设置为:aa123456

- 添加 SSH key:http://192.168.0.105:10080/profile/keys

- Gitlab 的具体使用可以看另外文章:Gitlab 的使用

Nexus + Jenkins + SonarQube

- 预计会使用内存:4G 左右

- 创建宿主机挂载目录:

mkdir -p /data/docker/ci/nexus /data/docker/ci/jenkins /data/docker/ci/jenkins/lib /data/docker/ci/jenkins/home /data/docker/ci/sonarqube /data/docker/ci/postgresql /data/docker/ci/gatling/results - 赋权(避免挂载的时候,一些程序需要容器中的用户的特定权限使用):

chmod -R 777 /data/docker/ci/nexus /data/docker/ci/jenkins/lib /data/docker/ci/jenkins/home /data/docker/ci/sonarqube /data/docker/ci/postgresql /data/docker/ci/gatling/results - 下面有一个细节要特别注意:yml 里面不能有中文。还有就是 sonar 的挂载目录不能直接挂在 /opt/sonarqube 上,不然会启动不了。

- 这里使用 docker-compose 的启动方式,所以需要创建 docker-compose.yml 文件:

version: '3'

networks:

prodnetwork:

driver: bridge

services:

sonardb:

image: postgres:9.6.6

restart: always

ports:

- "5433:5432"

networks:

- prodnetwork

volumes:

- /data/docker/ci/postgresql:/var/lib/postgresql

environment:

- POSTGRES_USER=sonar

- POSTGRES_PASSWORD=sonar

sonar:

image: sonarqube:6.7.1

restart: always

ports:

- "19000:9000"

- "19092:9092"

networks:

- prodnetwork

depends_on:

- sonardb

volumes:

- /data/docker/ci/sonarqube/conf:/opt/sonarqube/conf

- /data/docker/ci/sonarqube/data:/opt/sonarqube/data

- /data/docker/ci/sonarqube/extension:/opt/sonarqube/extensions

- /data/docker/ci/sonarqube/bundled-plugins:/opt/sonarqube/lib/bundled-plugins

environment:

- SONARQUBE_JDBC_URL=jdbc:postgresql://sonardb:5432/sonar

- SONARQUBE_JDBC_USERNAME=sonar

- SONARQUBE_JDBC_PASSWORD=sonar

nexus:

image: sonatype/nexus3

restart: always

ports:

- "18081:8081"

networks:

- prodnetwork

volumes:

- /data/docker/ci/nexus:/nexus-data

jenkins:

image: wine6823/jenkins:1.1

restart: always

ports:

- "18080:8080"

networks:

- prodnetwork

volumes:

- /var/run/docker.sock:/var/run/docker.sock

- /usr/bin/docker:/usr/bin/docker

- /etc/localtime:/etc/localtime:ro

- $HOME/.ssh:/root/.ssh

- /data/docker/ci/jenkins/lib:/var/lib/jenkins/

- /data/docker/ci/jenkins/home:/var/jenkins_home

depends_on:

- nexus

- sonar

environment:

- NEXUS_PORT=8081

- SONAR_PORT=9000

- SONAR_DB_PORT=5432

- 启动:

docker-compose up -d,启动比较慢,等个 2 分钟左右。 - 浏览器访问 SonarQube:http://192.168.0.105:19000

- 默认用户名:admin

- 默认密码:admin

- 插件安装地址:http://192.168.0.105:19000/admin/marketplace

- 代码检查规范选择:http://192.168.0.105:19000/profiles

- 如果没有安装相关检查插件,默认是没有内容可以设置,建议现在插件市场安装 FindBugs,这样也可以帮你生成插件目录:

/extension/plugins - 如果你有其他额外插件,可以把 jar 放在

${SONAR_HOME}/extension/plugins目录下,然后重启下 sonar

- 如果没有安装相关检查插件,默认是没有内容可以设置,建议现在插件市场安装 FindBugs,这样也可以帮你生成插件目录:

- 浏览器访问 Nexus:http://192.168.0.105:18081

- 默认用户名:admin

- 默认密码:admin123

- 浏览器访问 Jenkins:http://192.168.0.105:18080

- 首次进入 Jenkins 的 Web UI 界面是一个解锁页面 Unlock Jenkins,需要让你输入:Administrator password

- 这个密码放在:

/var/jenkins_home/secrets/initialAdminPassword,你需要先:docker exec -it ci_jenkins_1 /bin/bash- 然后:

cat /var/jenkins_home/secrets/initialAdminPassword,找到初始化密码

- 然后:

- 这个密码放在:

- 首次进入 Jenkins 的 Web UI 界面是一个解锁页面 Unlock Jenkins,需要让你输入:Administrator password

配置 Jenkins 拉取代码权限

- 因为 dockerfile 中我已经把宿主机的 .ssh 下的挂载在 Jenkins 的容器中

- 所以读取宿主机的 pub:

cat ~/.ssh/id_rsa.pub,然后配置在 Gitlab 中:http://192.168.0.105:10080/profile/keys - Jenkinsfile 中 Git URL 使用:ssh 协议,比如:

ssh://git@192.168.0.105:10022/gitnavi/spring-boot-ci-demo.git

Jenkins 特殊配置(减少权限问题,如果是内网的话)

- 访问:http://192.168.0.105:18080/configureSecurity/

- 去掉

防止跨站点请求伪造 - 勾选

登录用户可以做任何事下面的:Allow anonymous read access

- 去掉

配置 Gitlab Webhook

- Jenkins 访问:http://192.168.0.105:18080/job/任务名/configure

- 在

Build Triggers勾选:触发远程构建 (例如,使用脚本),在身份验证令牌输入框填写任意字符串,这个等下 Gitlab 会用到,假设我这里填写:112233

- 在

- Gitlab 访问:http://192.168.0.105:10080/用户名/项目名/settings/integrations

- 在

URL中填写:http://192.168.0.105:18080/job/任务名/build?token=112233

- 在

资料

LDAP 安装和配置

LDAP 基本概念

- https://segmentfault.com/a/1190000002607140

- http://www.itdadao.com/articles/c15a1348510p0.html

- http://blog.csdn.net/reblue520/article/details/51804162

LDAP 服务器端安装

- 环境:CentOS 7.3 x64(为了方便,已经禁用了防火墙)

- 常见服务端:

- 这里选择:OpenLDAP,安装(最新的是 2.4.40):

yum install -y openldap openldap-clients openldap-servers migrationtools - 配置:

cp /usr/share/openldap-servers/DB_CONFIG.example /var/lib/ldap/DB_CONFIGchown ldap. /var/lib/ldap/DB_CONFIG

- 启动:

systemctl start slapdsystemctl enable slapd

- 查看占用端口(默认用的是 389):

netstat -tlnp | grep slapd

设置OpenLDAP管理员密码

- 输入命令:

slappasswd,重复输入两次明文密码后(我是:123456),我得到一个加密后密码(后面会用到):{SSHA}YK8qBtlmEpjUiVEPyfmNNDALjBaUTasc - 新建临时配置目录:

mkdir /root/my_ldif ; cd /root/my_ldifvim chrootpw.ldif,添加如下内容:

dn: olcDatabase={0}config,cn=config

changetype: modify

add: olcRootPW

olcRootPW: {SSHA}YK8qBtlmEpjUiVEPyfmNNDALjBaUTasc

- 添加刚刚写的配置(过程比较慢):

ldapadd -Y EXTERNAL -H ldapi:/// -f chrootpw.ldif - 导入默认的基础配置(过程比较慢):

for i in /etc/openldap/schema/*.ldif; do ldapadd -Y EXTERNAL -H ldapi:/// -f $i; done

修改默认的domain

- 输入命令:

slappasswd,重复输入两次明文密码后(我是:111111),我得到一个加密后密码(后面会用到):{SSHA}rNLkIMYKvYhbBjxLzSbjVsJnZSkrfC3w cd /root/my_ldif ; vim chdomain.ldif,添加如下内容(cn,dc,dc,olcRootPW 几个值需要你自己改):

dn: olcDatabase={1}monitor,cn=config

changetype: modify

replace: olcAccess

olcAccess: {0}to * by dn.base="gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth"

read by dn.base="cn=gitnavi,dc=youmeek,dc=com" read by * none

dn: olcDatabase={2}hdb,cn=config

changetype: modify

replace: olcSuffix

olcSuffix: dc=youmeek,dc=com

dn: olcDatabase={2}hdb,cn=config

changetype: modify

replace: olcRootDN

olcRootDN: cn=gitnavi,dc=youmeek,dc=com

dn: olcDatabase={2}hdb,cn=config

changetype: modify

add: olcRootPW

olcRootPW: {SSHA}rNLkIMYKvYhbBjxLzSbjVsJnZSkrfC3w

dn: olcDatabase={2}hdb,cn=config

changetype: modify

add: olcAccess

olcAccess: {0}to attrs=userPassword,shadowLastChange by

dn="cn=gitnavi,dc=youmeek,dc=com" write by anonymous auth by self write by * none

olcAccess: {1}to dn.base="" by * read

olcAccess: {2}to * by dn="cn=gitnavi,dc=youmeek,dc=com" write by * read

- 添加配置:

ldapadd -Y EXTERNAL -H ldapi:/// -f chdomain.ldif

添加一个基本的目录

cd /root/my_ldif ; vim basedomain.ldif,添加如下内容(cn,dc,dc 几个值需要你自己改):

dn: dc=youmeek,dc=com

objectClass: top

objectClass: dcObject

objectclass: organization

o: youmeek dot Com

dc: youmeek

dn: cn=gitnavi,dc=youmeek,dc=com

objectClass: organizationalRole

cn: gitnavi

description: Directory Manager

dn: ou=People,dc=youmeek,dc=com

objectClass: organizationalUnit

ou: People

dn: ou=Group,dc=youmeek,dc=com

objectClass: organizationalUnit

ou: Group

- 添加配置:

ldapadd -x -D cn=gitnavi,dc=youmeek,dc=com -W -f basedomain.ldif,会提示让你输入配置 domain 的密码,我是:111111 简单的配置到此就好了

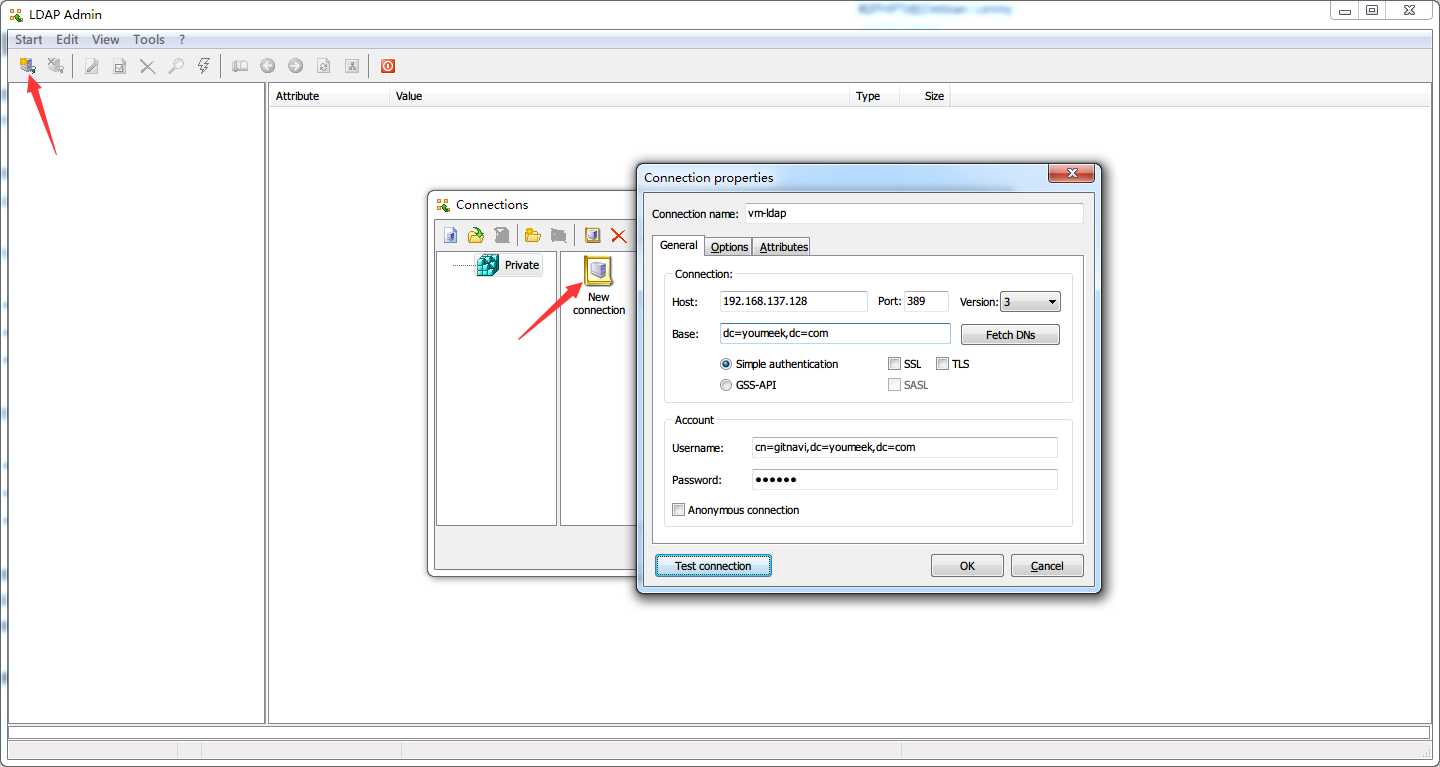

测试连接

- 重启下服务:

systemctl restart slapd - 本机测试,输入命令:

ldapsearch -LLL -W -x -D "cn=gitnavi,dc=youmeek,dc=com" -H ldap://localhost -b "dc=youmeek,dc=com",输入 domain 密码,可以查询到相应信息 - 局域网客户端连接测试,下载 Ldap Admin(下载地址看文章下面),具体连接信息看下图:

LDAP 客户端

资料

- https://superlc320.gitbooks.io/samba-ldap-centos7/ldap_+_centos_7.html

- http://yhz61010.iteye.com/blog/2352672

- https://kyligence.gitbooks.io/kap-manual/zh-cn/security/ldap.cn.html

- http://gaowenlong.blog.51cto.com/451336/1887408

Kubernets(K8S) 使用

环境说明

- CentOS 7.7(不准确地说:要求必须是 CentOS 7 64位)

- Docker

Kubernetes

- 目前流行的容器编排系统

- 简称:K8S

- 官网:https://kubernetes.io/

- 主要解决几个问题:

调度生命周期及健康状况服务发现监控认证容器聚合

- 主要角色:Master、Node

安装准备 - Kubernetes 1.13 版本

- 推荐最低 2C2G,优先:2C4G 或以上

- 特别说明:1.13 之前的版本,由于网络问题,需要各种设置,这里就不再多说了。1.13 之后相对就简单了点。

- 优先官网软件包:kubeadm

- 官网资料:

- issues 入口:https://github.com/kubernetes/kubeadm

- 源码入口:https://github.com/kubernetes/kubernetes/tree/master/cmd/kubeadm

- 安装指导:https://kubernetes.io/docs/setup/independent/install-kubeadm/

- 按官网要求做下检查:https://kubernetes.io/docs/setup/independent/install-kubeadm/#before-you-begin

- 网络环境:https://kubernetes.io/docs/setup/independent/install-kubeadm/#verify-the-mac-address-and-product-uuid-are-unique-for-every-node

- 端口检查:https://kubernetes.io/docs/setup/independent/install-kubeadm/#check-required-ports

- 对 Docker 版本的支持,这里官网推荐的是 18.06:https://kubernetes.io/docs/setup/release/notes/#sig-cluster-lifecycle

- 三大核心工具包,都需要各自安装,并且注意版本关系:

kubeadm: the command to bootstrap the cluster.- 集群部署、管理工具

kubelet: the component that runs on all of the machines in your cluster and does things like starting pods and containers.- 具体执行层面的管理 Pod 和 Docker 工具

kubectl: the command line util to talk to your cluster.- 操作 k8s 的命令行入口工具

- 官网插件安装过程的故障排查:https://kubernetes.io/docs/setup/independent/troubleshooting-kubeadm/

- 其他部署方案:

开始安装 - Kubernetes 1.13.3 版本

- 三台机子:

- master-1:

192.168.0.127 - node-1:

192.168.0.128 - node-2:

192.168.0.129

- master-1:

- 官网最新版本:https://github.com/kubernetes/kubernetes/releases

- 官网 1.13 版本的 changelog:https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.13.md

- 所有节点安装 Docker 18.06,并设置阿里云源

- 可以参考:点击我o(∩_∩)o

- 核心,查看可以安装的 Docker 列表:

yum list docker-ce --showduplicates

- 所有节点设置 kubernetes repo 源,并安装 Kubeadm、Kubelet、Kubectl 都设置阿里云的源

- Kubeadm 初始化集群过程当中,它会下载很多的镜像,默认也是去 Google 家里下载。但是 1.13 新增了一个配置:

--image-repository算是救了命。

安装具体流程

- 同步所有机子时间:

systemctl start chronyd.service && systemctl enable chronyd.service - 所有机子禁用防火墙、selinux、swap

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl disable iptables.service

iptables -P FORWARD ACCEPT

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo "vm.swappiness = 0" >> /etc/sysctl.conf

swapoff -a && sysctl -w vm.swappiness=0

- 给各自机子设置 hostname 和 hosts

hostnamectl --static set-hostname k8s-master-1

hostnamectl --static set-hostname k8s-node-1

hostnamectl --static set-hostname k8s-node-2

vim /etc/hosts

192.168.0.127 k8s-master-1

192.168.0.128 k8s-node-1

192.168.0.129 k8s-node-2

- 给 master 设置免密

ssh-keygen -t rsa

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

ssh localhost

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 root@k8s-node-1(根据提示输入 k8s-node-1 密码)

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 root@k8s-node-2(根据提示输入 k8s-node-2 密码)

ssh k8s-master-1

ssh k8s-node-1

ssh k8s-node-2

- 给所有机子设置 yum 源

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

scp -r /etc/yum.repos.d/kubernetes.repo root@k8s-node-1:/etc/yum.repos.d/

scp -r /etc/yum.repos.d/kubernetes.repo root@k8s-node-2:/etc/yum.repos.d/

- 给 master 机子创建 flannel 配置文件

mkdir -p /etc/cni/net.d && vim /etc/cni/net.d/10-flannel.conflist

{

"name": "cbr0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

- 给所有机子创建配置

vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.swappiness=0

scp -r /etc/sysctl.d/k8s.conf root@k8s-node-1:/etc/sysctl.d/

scp -r /etc/sysctl.d/k8s.conf root@k8s-node-2:/etc/sysctl.d/

modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf

- 给所有机子安装组件

yum install -y kubelet-1.13.3 kubeadm-1.13.3 kubectl-1.13.3 --disableexcludes=kubernetes

- 给所有机子添加一个变量

vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

最后一行添加:Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

- 启动所有机子

systemctl enable kubelet && systemctl start kubelet

kubeadm version

kubectl version

- 初始化 master 节点:

echo 1 > /proc/sys/net/ipv4/ip_forward

kubeadm init \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--pod-network-cidr 10.244.0.0/16 \

--kubernetes-version 1.13.3 \

--ignore-preflight-errors=Swap

其中 10.244.0.0/16 是 flannel 插件固定使用的ip段,它的值取决于你准备安装哪个网络插件

这个过程会下载一些 docker 镜像,时间可能会比较久,看你网络情况。

终端会输出核心内容:

[init] Using Kubernetes version: v1.13.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master-1 localhost] and IPs [192.168.0.127 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master-1 localhost] and IPs [192.168.0.127 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master-1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.0.127]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 19.001686 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master-1" as an annotation

[mark-control-plane] Marking the node k8s-master-1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 8tpo9l.jlw135r8559kaad4

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.0.127:6443 --token 8tpo9l.jlw135r8559kaad4 --discovery-token-ca-cert-hash sha256:d6594ccc1310a45cbebc45f1c93f5ac113873786365ed63efcf667c952d7d197

- 给 master 机子设置配置

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=$HOME/.kube/config

- 在 master 上查看一些环境

kubeadm token list

kubectl cluster-info

- 给 master 安装 Flannel

cd /opt && wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f /opt/kube-flannel.yml

- 到 node 节点加入集群:

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

kubeadm join 192.168.0.127:6443 --token 8tpo9l.jlw135r8559kaad4 --discovery-token-ca-cert-hash sha256:d6594ccc1310a45cbebc45f1c93f5ac113873786365ed63efcf667c952d7d197

这时候终端会输出:

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "192.168.0.127:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.0.127:6443"

[discovery] Requesting info from "https://192.168.0.127:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.0.127:6443"

[discovery] Successfully established connection with API Server "192.168.0.127:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node-1" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

- 如果 node 节点加入失败,可以:

kubeadm reset,再来重新 join - 在 master 节点上:

kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

结果都是 Healthy 则表示可以了,不然就得检查。必要时可以用:`kubeadm reset` 重置,重新进行集群初始化

- 在 master 节点上:

kubectl get nodes

如果还是 NotReady,则查看错误信息:kubectl get pods --all-namespaces

其中:Pending/ContainerCreating/ImagePullBackOff 都是 Pod 没有就绪,我们可以这样查看对应 Pod 遇到了什么问题

kubectl describe pod <Pod Name> --namespace=kube-system

或者:kubectl logs <Pod Name> -n kube-system

tail -f /var/log/messages

主要概念

-

Master 节点,负责集群的调度、集群的管理

- 常见组件:https://kubernetes.io/docs/concepts/overview/components/

- kube-apiserver:API服务

- kube-scheduler:调度

- Kube-Controller-Manager:容器编排

- Etcd:保存了整个集群的状态

- Kube-proxy:负责为 Service 提供 cluster 内部的服务发现和负载均衡

- Kube-DNS:负责为整个集群提供 DNS 服务

-

node 节点,负责容器相关的处理

-

Pods

创建,调度以及管理的最小单元

共存的一组容器的集合

容器共享PID,网络,IPC以及UTS命名空间

容器共享存储卷

短暂存在

Volumes

数据持久化

Pod中容器共享数据

生命周期

支持多种类型的数据卷 – emptyDir, hostpath, gcePersistentDisk, awsElasticBlockStore, nfs, iscsi, glusterfs, secrets

Labels

用以标示对象(如Pod)的key/value对

组织并选择对象子集

Replication Controllers

确保在任一时刻运行指定数目的Pod

容器重新调度

规模调整

在线升级

多发布版本跟踪

Services

抽象一系列Pod并定义其访问规则

固定IP地址和DNS域名

通过环境变量和DNS发现服务

负载均衡

外部服务 – ClusterIP, NodePort, LoadBalancer

主要组成模块

etcd

高可用的Key/Value存储

只有apiserver有读写权限

使用etcd集群确保数据可靠性

apiserver

Kubernetes系统入口, REST

认证

授权

访问控制

服务帐号

资源限制

kube-scheduler

资源需求

服务需求

硬件/软件/策略限制

关联性和非关联性

数据本地化

kube-controller-manager

Replication controller

Endpoint controller

Namespace controller

Serviceaccount controller

kubelet

节点管理器

确保调度到本节点的Pod的运行和健康

kube-proxy

Pod网络代理

TCP/UDP请求转发

负载均衡(Round Robin)

服务发现

环境变量

DNS – kube2sky, etcd,skydns

网络

容器间互相通信

节点和容器间互相通信

每个Pod使用一个全局唯一的IP

高可用

kubelet保证每一个master节点的服务正常运行

系统监控程序确保kubelet正常运行

Etcd集群

多个apiserver进行负载均衡

Master选举确保kube-scheduler和kube-controller-manager高可用

资料

Hadoop 安装和配置

Hadoop 说明

- Hadoop 官网:https://hadoop.apache.org/

- Hadoop 官网下载:https://hadoop.apache.org/releases.html

基础环境

- 学习机器 2C4G(生产最少 8G):

- 172.16.0.17

- 172.16.0.43

- 172.16.0.180

- 操作系统:CentOS 7.5

- root 用户

- 所有机子必备:Java:1.8

- 确保:

echo $JAVA_HOME能查看到路径,并记下来路径

- 确保:

- Hadoop:2.6.5

- 关闭所有机子的防火墙:

systemctl stop firewalld.service

集群环境设置

- Hadoop 集群具体来说包含两个集群:HDFS 集群和 YARN 集群,两者逻辑上分离,但物理上常在一起

- HDFS 集群:负责海量数据的存储,集群中的角色主要有 NameNode / DataNode

- YARN 集群:负责海量数据运算时的资源调度,集群中的角色主要有 ResourceManager /NodeManager

- HDFS 采用 master/worker 架构。一个 HDFS 集群是由一个 Namenode 和一定数目的 Datanodes 组成。Namenode 是一个中心服务器,负责管理文件系统的命名空间 (namespace) 以及客户端对文件的访问。集群中的 Datanode 一般是一个节点一个,负责管理它所在节点上的存储。

- 分别给三台机子设置 hostname

hostnamectl --static set-hostname linux01

hostnamectl --static set-hostname linux02

hostnamectl --static set-hostname linux03

- 修改 hosts

就按这个来,其他多余的别加,不然可能也会有影响

vim /etc/hosts

172.16.0.17 linux01

172.16.0.43 linux02

172.16.0.180 linux03

- 对 linux01 设置免密:

生产密钥对

ssh-keygen -t rsa

公钥内容写入 authorized_keys

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

测试:

ssh localhost

- 将公钥复制到两台 slave

- 如果你是采用 pem 登录的,可以看这个:SSH 免密登录

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 root@172.16.0.43,根据提示输入 linux02 机器的 root 密码,成功会有相应提示

ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 root@172.16.0.180,根据提示输入 linux03 机器的 root 密码,成功会有相应提示

在 linux01 上测试:

ssh linux02

ssh linux03

Hadoop 安装

- 关于版本这件事,主要看你的技术生态圈。如果你的其他技术,比如 Spark,Flink 等不支持最新版,则就只能向下考虑。

- 我这里技术栈,目前只能到:2.6.5,所以下面的内容都是基于 2.6.5 版本

- 官网说明:https://hadoop.apache.org/docs/r2.6.5/hadoop-project-dist/hadoop-common/ClusterSetup.html

- 分别在三台机子上都创建目录:

mkdir -p /data/hadoop/hdfs/name /data/hadoop/hdfs/data /data/hadoop/hdfs/tmp

- 下载 Hadoop:http://apache.claz.org/hadoop/common/hadoop-2.6.5/

- 现在 linux01 机子上安装

cd /usr/local && wget http://apache.claz.org/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

tar zxvf hadoop-2.6.5.tar.gz,有 191M 左右

- 给三台机子都先设置 HADOOP_HOME

- 会 ansible playbook 会方便点:Ansible 安装和配置

vim /etc/profile

export HADOOP_HOME=/usr/local/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile

修改 linux01 配置

修改 JAVA_HOME

vim $HADOOP_HOME/etc/hadoop/hadoop-env.sh

把 25 行的

export JAVA_HOME=${JAVA_HOME}

都改为

export JAVA_HOME=/usr/local/jdk1.8.0_191

vim $HADOOP_HOME/etc/hadoop/yarn-env.sh

文件开头加一行 export JAVA_HOME=/usr/local/jdk1.8.0_191

- hadoop.tmp.dir == 指定hadoop运行时产生文件的存储目录

vim $HADOOP_HOME/etc/hadoop/core-site.xml,改为:

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/data/hadoop/hdfs/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<!--

<property>

<name>fs.default.name</name>

<value>hdfs://linux01:9000</value>

</property>

-->

<property>

<name>fs.defaultFS</name>

<value>hdfs://linux01:9000</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

- 配置包括副本数量

- 最大值是 datanode 的个数

- 数据存放目录

vim $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/data/hadoop/hdfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/data/hadoop/hdfs/data</value>

<final>true</final>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

- 设置 YARN

新创建:vim $HADOOP_HOME/etc/hadoop/mapred-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>4096</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>8192</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx3072m</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx6144m</value>

</property>

</configuration>

- yarn.resourcemanager.hostname == 指定YARN的老大(ResourceManager)的地址

- yarn.nodemanager.aux-services == NodeManager上运行的附属服务。需配置成mapreduce_shuffle,才可运行MapReduce程序默认值:""

- 32G 内存的情况下配置:

vim $HADOOP_HOME/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>linux01</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>20480</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

</configuration>

- 配置 slave 相关信息

vim $HADOOP_HOME/etc/hadoop/slaves

把默认的配置里面的 localhost 删除,换成:

linux02

linux03

scp -r /usr/local/hadoop-2.6.5 root@linux02:/usr/local/

scp -r /usr/local/hadoop-2.6.5 root@linux03:/usr/local/

linux01 机子运行

格式化 HDFS

hdfs namenode -format

- 输出结果:

[root@linux01 hadoop-2.6.5]# hdfs namenode -format

18/12/17 17:47:17 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.6.5

STARTUP_MSG: classpath = /usr/local/hadoop-2.6.5/etc/hadoop:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-io-2.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/junit-4.11.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jets3t-0.9.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/gson-2.2.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/hadoop-auth-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/curator-framework-2.6.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/htrace-core-3.0.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-math3-3.1.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/httpclient-4.2.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/httpcore-4.2.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/curator-client-2.6.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/hadoop-annotations-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/hadoop-common-2.6.5-tests.jar:/usr/local/hadoop-2.6.5/share/hadoop/common/hadoop-nfs-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-io-2.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5-tests.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/hdfs/hadoop-hdfs-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-io-2.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/activation-1.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-lang-2.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/guava-11.0.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jettison-1.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-client-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jline-0.9.94.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-api-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-common-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-registry-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-client-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-common-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/junit-4.11.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar:/usr/local/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.5-tests.jar:/usr/local/hadoop-2.6.5/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r e8c9fe0b4c252caf2ebf1464220599650f119997; compiled by 'sjlee' on 2016-10-02T23:43Z

STARTUP_MSG: java = 1.8.0_191

************************************************************/

18/12/17 17:47:17 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/12/17 17:47:17 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-beba43b4-0881-48b4-8eda-5c3bca046398

18/12/17 17:47:17 INFO namenode.FSNamesystem: No KeyProvider found.

18/12/17 17:47:17 INFO namenode.FSNamesystem: fsLock is fair:true

18/12/17 17:47:17 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

18/12/17 17:47:17 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

18/12/17 17:47:17 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

18/12/17 17:47:17 INFO blockmanagement.BlockManager: The block deletion will start around 2018 Dec 17 17:47:17

18/12/17 17:47:17 INFO util.GSet: Computing capacity for map BlocksMap

18/12/17 17:47:17 INFO util.GSet: VM type = 64-bit

18/12/17 17:47:17 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB

18/12/17 17:47:17 INFO util.GSet: capacity = 2^21 = 2097152 entries

18/12/17 17:47:17 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

18/12/17 17:47:17 INFO blockmanagement.BlockManager: defaultReplication = 2

18/12/17 17:47:17 INFO blockmanagement.BlockManager: maxReplication = 512

18/12/17 17:47:17 INFO blockmanagement.BlockManager: minReplication = 1

18/12/17 17:47:17 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

18/12/17 17:47:17 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

18/12/17 17:47:17 INFO blockmanagement.BlockManager: encryptDataTransfer = false

18/12/17 17:47:17 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

18/12/17 17:47:17 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

18/12/17 17:47:17 INFO namenode.FSNamesystem: supergroup = supergroup

18/12/17 17:47:17 INFO namenode.FSNamesystem: isPermissionEnabled = false

18/12/17 17:47:17 INFO namenode.FSNamesystem: HA Enabled: false

18/12/17 17:47:17 INFO namenode.FSNamesystem: Append Enabled: true

18/12/17 17:47:17 INFO util.GSet: Computing capacity for map INodeMap

18/12/17 17:47:17 INFO util.GSet: VM type = 64-bit

18/12/17 17:47:17 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

18/12/17 17:47:17 INFO util.GSet: capacity = 2^20 = 1048576 entries

18/12/17 17:47:17 INFO namenode.NameNode: Caching file names occuring more than 10 times

18/12/17 17:47:17 INFO util.GSet: Computing capacity for map cachedBlocks

18/12/17 17:47:17 INFO util.GSet: VM type = 64-bit

18/12/17 17:47:17 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

18/12/17 17:47:17 INFO util.GSet: capacity = 2^18 = 262144 entries

18/12/17 17:47:17 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

18/12/17 17:47:17 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

18/12/17 17:47:17 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

18/12/17 17:47:17 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/12/17 17:47:17 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/12/17 17:47:17 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/12/17 17:47:17 INFO util.GSet: VM type = 64-bit

18/12/17 17:47:17 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

18/12/17 17:47:17 INFO util.GSet: capacity = 2^15 = 32768 entries

18/12/17 17:47:17 INFO namenode.NNConf: ACLs enabled? false

18/12/17 17:47:17 INFO namenode.NNConf: XAttrs enabled? true

18/12/17 17:47:17 INFO namenode.NNConf: Maximum size of an xattr: 16384

18/12/17 17:47:17 INFO namenode.FSImage: Allocated new BlockPoolId: BP-233285725-127.0.0.1-1545040037972

18/12/17 17:47:18 INFO common.Storage: Storage directory /data/hadoop/hdfs/name has been successfully formatted.

18/12/17 17:47:18 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

18/12/17 17:47:18 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 of size 321 bytes saved in 0 seconds.

18/12/17 17:47:18 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/12/17 17:47:18 INFO util.ExitUtil: Exiting with status 0

18/12/17 17:47:18 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/

HDFS 启动

- 启动:start-dfs.sh,根据提示一路 yes

这个命令效果:

主节点会启动任务:NameNode 和 SecondaryNameNode

从节点会启动任务:DataNode

主节点查看:jps,可以看到:

21922 Jps

21603 NameNode

21787 SecondaryNameNode

从节点查看:jps 可以看到:

19728 DataNode

19819 Jps

- 查看运行更多情况:

hdfs dfsadmin -report

Configured Capacity: 0 (0 B)

Present Capacity: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used: 0 (0 B)

DFS Used%: NaN%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

- 如果需要停止:

stop-dfs.sh - 查看 log:

cd $HADOOP_HOME/logs

YARN 运行

start-yarn.sh

然后 jps 你会看到一个:ResourceManager

从节点你会看到:NodeManager

停止:stop-yarn.sh

端口情况

- 主节点当前运行的所有端口:

netstat -tpnl | grep java - 会用到端口(为了方便展示,整理下顺序):

tcp 0 0 172.16.0.17:9000 0.0.0.0:* LISTEN 22932/java >> NameNode

tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 22932/java >> NameNode

tcp 0 0 0.0.0.0:50090 0.0.0.0:* LISTEN 23125/java >> SecondaryNameNode

tcp6 0 0 172.16.0.17:8030 :::* LISTEN 23462/java >> ResourceManager

tcp6 0 0 172.16.0.17:8031 :::* LISTEN 23462/java >> ResourceManager

tcp6 0 0 172.16.0.17:8032 :::* LISTEN 23462/java >> ResourceManager

tcp6 0 0 172.16.0.17:8033 :::* LISTEN 23462/java >> ResourceManager

tcp6 0 0 172.16.0.17:8088 :::* LISTEN 23462/java >> ResourceManager

- 从节点当前运行的所有端口:

netstat -tpnl | grep java - 会用到端口(为了方便展示,整理下顺序):

tcp 0 0 0.0.0.0:50010 0.0.0.0:* LISTEN 14545/java >> DataNode

tcp 0 0 0.0.0.0:50020 0.0.0.0:* LISTEN 14545/java >> DataNode

tcp 0 0 0.0.0.0:50075 0.0.0.0:* LISTEN 14545/java >> DataNode

tcp6 0 0 :::8040 :::* LISTEN 14698/java >> NodeManager

tcp6 0 0 :::8042 :::* LISTEN 14698/java >> NodeManager

tcp6 0 0 :::13562 :::* LISTEN 14698/java >> NodeManager

tcp6 0 0 :::37481 :::* LISTEN 14698/java >> NodeManager

管理界面

- 查看 HDFS NameNode 管理界面:http://linux01:50070

- 访问 YARN ResourceManager 管理界面:http://linux01:8088

- 访问 NodeManager-1 管理界面:http://linux02:8042

- 访问 NodeManager-2 管理界面:http://linux03:8042

运行作业

- 在主节点上操作

- 运行一个 Mapreduce 作业试试:

- 计算 π:

hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar pi 5 10

- 计算 π:

- 运行一个文件相关作业:

- 由于运行 hadoop 时指定的输入文件只能是 HDFS 文件系统中的文件,所以我们必须将要进行 wordcount 的文件从本地文件系统拷贝到 HDFS 文件系统中。

- 查看目前根目录结构:

hadoop fs -ls /- 查看目前根目录结构,另外写法:

hadoop fs -ls hdfs://linux-05:9000/ - 或者列出目录以及下面的文件:

hadoop fs -ls -R / - 更多命令可以看:hadoop HDFS常用文件操作命令

- 查看目前根目录结构,另外写法:

- 创建目录:

hadoop fs -mkdir -p /tmp/zch/wordcount_input_dir - 上传文件:

hadoop fs -put /opt/input.txt /tmp/zch/wordcount_input_dir - 查看上传的目录下是否有文件:

hadoop fs -ls /tmp/zch/wordcount_input_dir - 向 yarn 提交作业,计算单词个数:

hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar wordcount /tmp/zch/wordcount_input_dir /tmp/zch/wordcount_output_dir - 查看计算结果输出的目录:

hadoop fs -ls /tmp/zch/wordcount_output_dir - 查看计算结果输出内容:

hadoop fs -cat /tmp/zch/wordcount_output_dir/part-r-00000

- 查看正在运行的 Hadoop 任务:

yarn application -list - 关闭 Hadoop 任务进程:

yarn application -kill 你的ApplicationId

资料

- 如何正确的为 MapReduce 配置内存分配

- https://www.linode.com/docs/databases/hadoop/how-to-install-and-set-up-hadoop-cluster/

- http://www.cnblogs.com/Leo_wl/p/7426496.html

- https://blog.csdn.net/bingduanlbd/article/details/51892750

- https://blog.csdn.net/whdxjbw/article/details/81050597

Gitlab 安装和配置

Docker Compose 安装方式

- 创建宿主机挂载目录:

mkdir -p /data/docker/gitlab/gitlab /data/docker/gitlab/redis /data/docker/gitlab/postgresql - 赋权(避免挂载的时候,一些程序需要容器中的用户的特定权限使用):

chown -R 777 /data/docker/gitlab/gitlab /data/docker/gitlab/redis /data/docker/gitlab/postgresql - 这里使用 docker-compose 的启动方式,所以需要创建 docker-compose.yml 文件:

gitlab:

image: sameersbn/gitlab:10.4.2-1

ports:

- "10022:22"

- "10080:80"

links:

- gitlab-redis:redisio

- gitlab-postgresql:postgresql

environment:

- GITLAB_PORT=80

- GITLAB_SSH_PORT=22

- GITLAB_SECRETS_DB_KEY_BASE=long-and-random-alpha-numeric-string

- GITLAB_SECRETS_SECRET_KEY_BASE=long-and-random-alpha-numeric-string

- GITLAB_SECRETS_OTP_KEY_BASE=long-and-random-alpha-numeric-string

volumes:

- /data/docker/gitlab/gitlab:/home/git/data

restart: always

gitlab-redis:

image: sameersbn/redis

volumes:

- /data/docker/gitlab/redis:/var/lib/redis

restart: always

gitlab-postgresql:

image: sameersbn/postgresql:9.6-2

environment:

- DB_NAME=gitlabhq_production

- DB_USER=gitlab

- DB_PASS=password

- DB_EXTENSION=pg_trgm

volumes:

- /data/docker/gitlab/postgresql:/var/lib/postgresql

restart: always

- 启动:

docker-compose up -d - 浏览器访问:http://192.168.0.105:10080

Gitlab 高可用方案(High Availability)

- 官网:https://about.gitlab.com/high-availability/

- 本质就是把文件、缓存、数据库抽离出来,然后部署多个 Gitlab 用 nginx 前面做负载。

原始安装方式(推荐)

- 推荐至少内存 4G,它有大量组件

- 有开源版本和收费版本,各版本比较:https://about.gitlab.com/products/

- 官网:https://about.gitlab.com/

- 中文网:https://www.gitlab.com.cn/

- 官网下载:https://about.gitlab.com/downloads/

- 官网安装说明:https://about.gitlab.com/installation/#centos-7

- 如果上面的下载比较慢,也有国内的镜像:

- 参考:https://ken.io/note/centos7-gitlab-install-tutorial

sudo yum install -y curl policycoreutils-python openssh-server

sudo systemctl enable sshd

sudo systemctl start sshd

curl https://packages.gitlab.com/install/repositories/gitlab/gitlab-ce/script.rpm.sh | sudo bash

sudo EXTERNAL_URL="http://192.168.1.123:8181" yum install -y gitlab-ce

配置

- 配置域名 / IP

- 编辑配置文件:

sudo vim /etc/gitlab/gitlab.rb - 找到 13 行左右:

external_url 'http://gitlab.example.com',改为你的域名 / IP - 刷新配置:

sudo gitlab-ctl reconfigure,第一次这个时间会比较久,我花了好几分钟 - 启动服务:

sudo gitlab-ctl start - 停止服务:

sudo gitlab-ctl stop - 重启服务:

sudo gitlab-ctl restart

- 编辑配置文件:

- 前面的初始化配置完成之后,访问当前机子 IP:

http://192.168.1.111:80 - 默认用户是

root,并且没有密码,所以第一次访问是让你设置你的 root 密码,我设置为:gitlab123456(至少 8 位数) - 设置会初始化密码之后,你就需要登录了。输入设置的密码。

- root 管理员登录之后常用的设置地址(请求地址都是 RESTful 风格很好懂,也应该不会再变了。):

- 用户管理:http://192.168.1.111/admin/users

- 用户组管理:http://192.168.1.111/admin/groups

- 项目管理:http://192.168.1.111/admin/projects

- 添加 SSH Keys:http://192.168.1.111/profile/keys

- 给新创建的用户设置密码:http://192.168.1.111/admin/users/用户名/edit

- 新创建的用户,他首次登录会要求他强制修改密码的,这个设定很棒!

- 普通用户登录之后常去的链接:

- 配置 SSH Keys:http://192.168.1.111/profile/keys

配置 Jenkins 拉取代码权限

- Gitlab 创建一个 Access Token:http://192.168.0.105:10080/profile/personal_access_tokens

- 填写任意 Name 字符串

- 勾选:API

Access the authenticated user's API - 点击:Create personal access token,会生成一个类似格式的字符串:

wt93jQzA8yu5a6pfsk3s,这个 Jenkinsfile 会用到

- 先访问 Jenkins 插件安装页面,安装下面三个插件:http://192.168.0.105:18080/pluginManager/available

- Gitlab:可能会直接安装不成功,如果不成功根据报错的详细信息可以看到 hpi 文件的下载地址,挂代理下载下来,然后离线安装即可

- Gitlab Hook:用于触发 GitLab 的一些 WebHooks 来构建项目

- Gitlab Authentication 这个插件提供了使用GitLab进行用户认证和授权的方案

- 安装完插件后,访问 Jenkins 这个路径(Jenkins-->Credentials-->System-->Global credentials(unrestricted)-->Add Credentials)

- 该路径链接地址:http://192.168.0.105:18080/credentials/store/system/domain/_/newCredentials

- kind 下拉框选择:

GitLab API token - token 就填写我们刚刚生成的 access token

- ID 填写我们 Gitlab 账号

权限

- 官网帮助文档的权限说明:http://192.168.1.111/help/user/permissions

用户组的权限

- 用户组有这几种权限的概念:

Guest、Reporter、Developer、Master、Owner - 这个概念在设置用户组的时候会遇到,叫做:

Add user(s) to the group,比如链接:http://192.168.1.111/admin/groups/组名称

| 行为 | Guest | Reporter | Developer | Master | Owner |

|---|---|---|---|---|---|

| 浏览组 | ✓ | ✓ | ✓ | ✓ | ✓ |

| 编辑组 | ✓ | ||||

| 创建项目 | ✓ | ✓ | |||

| 管理组成员 | ✓ | ||||

| 移除组 | ✓ |

项目组的权限

- 项目组也有这几种权限的概念:

Guest、Reporter、Developer、Master、OwnerGuest:访客Reporter:报告者; 可以理解为测试员、产品经理等,一般负责提交issue等Developer:开发者; 负责开发Master:主人; 一般是组长,负责对Master分支进行维护Owner:拥有者; 一般是项目经理

- 这个概念在项目设置的时候会遇到,叫做:

Members,比如我有一个组下的项目链接:http://192.168.1.111/组名称/项目名称/settings/members

| 行为 | Guest | Reporter | Developer | Master | Owner |

|---|---|---|---|---|---|

| 创建issue | ✓ | ✓ | ✓ | ✓ | ✓ |

| 留言评论 | ✓ | ✓ | ✓ | ✓ | ✓ |

| 更新代码 | ✓ | ✓ | ✓ | ✓ | |

| 下载工程 | ✓ | ✓ | ✓ | ✓ | |

| 创建代码片段 | ✓ | ✓ | ✓ | ✓ | |

| 创建合并请求 | ✓ | ✓ | ✓ | ||

| 创建新分支 | ✓ | ✓ | ✓ | ||

| 提交代码到非保护分支 | ✓ | ✓ | ✓ | ||

| 强制提交到非保护分支 | ✓ | ✓ | ✓ | ||

| 移除非保护分支 | ✓ | ✓ | ✓ | ||

| 添加tag | ✓ | ✓ | ✓ | ||

| 创建wiki | ✓ | ✓ | ✓ | ||

| 管理issue处理者 | ✓ | ✓ | ✓ | ||

| 管理labels | ✓ | ✓ | ✓ | ||

| 创建里程碑 | ✓ | ✓ | |||

| 添加项目成员 | ✓ | ✓ | |||

| 提交保护分支 | ✓ | ✓ | |||

| 使能分支保护 | ✓ | ✓ | |||

| 修改/移除tag | ✓ | ✓ | |||

| 编辑工程 | ✓ | ✓ | |||

| 添加deploy keys | ✓ | ✓ | |||

| 配置hooks | ✓ | ✓ | |||

| 切换visibility level | ✓ | ||||

| 切换工程namespace | ✓ | ||||

| 移除工程 | ✓ | ||||

| 强制提交保护分支 | ✓ | ||||

| 移除保护分支 | ✓ |

批量从一个项目中的成员转移到另外一个项目

- 项目的设置地址:http://192.168.1.111/组名称/项目名称/settings/members

- 有一个 Import 按钮,跳转到:http://192.168.1.111/组名称/项目名称/project_members/import

限定哪些分支可以提交、可以 merge

- 也是在项目设置里面:http://192.168.1.111/组名称/项目名称/settings/repository#

- 设置 CI (持续集成) 的 key 也是在这个地址上设置。

Gitlab 的其他功能使用

创建用户

- 地址:http://119.23.252.150:10080/admin/users/

- 创建用户是没有填写密码的地方,默认是创建后会发送邮件给用户进行首次使用的密码设置。但是,有时候没必要这样,你可以创建好用户之后,编辑该用户就可以强制设置密码了(即使你设置了,第一次用户使用还是要让你修改密码...真是严苛)

创建群组

- 地址:http://119.23.252.150:10080/groups

- 群组主要有三种 Visibility Level:

- Private(私有,内部成员才能看到),The group and its projects can only be viewed by members.

- Internal(内部,只要能登录 Gitlab 就可以看到),The group and any internal projects can be viewed by any logged in user.

- Public(所有人都可以看到),The group and any public projects can be viewed without any authentication.

创建项目

增加 SSH keys

- 地址:http://119.23.252.150:10080/profile/keys

- 官网指导:http://119.23.252.150:10080/help/ssh/README

- 新增 SSH keys:

ssh-keygen -t rsa -C "gitnavi@qq.com" -b 4096 - linux 读取 SSH keys 值:

cat ~/.ssh/id_rsa.pub,复制到 gitlab 配置页面

使用 Gitlab 的一个开发流程 - Git flow

- Git flow:我是翻译成:Git 开发流程建议(不是规范,适合大点的团队),也有一个插件叫做这个,本质是用插件来帮你引导做规范的流程管理。

- 这几篇文章很好,不多说了:

- 比较起源的一个说明(英文):http://nvie.com/posts/a-successful-git-branching-model/

- Git-flow 插件也是他开发的,插件地址:https://github.com/nvie/gitflow

- Git-flow 插件的一些相关资料:

- http://www.ruanyifeng.com/blog/2015/12/git-workflow.html

- https://zhangmengpl.gitbooks.io/gitlab-guide/content/whatisgitflow.html

- http://blog.jobbole.com/76867/

- http://www.cnblogs.com/cnblogsfans/p/5075073.html

- 比较起源的一个说明(英文):http://nvie.com/posts/a-successful-git-branching-model/

接入第三方登录

-

官网文档:

-

gitlab 自己本身维护一套用户系统,第三方认证服务一套用户系统,gitlab 可以将两者关联起来,然后用户可以选择其中一种方式进行登录而已。

-

所以,gitlab 第三方认证只能用于网页登录,clone 时仍然使用用户在 gitlab 的账户密码,推荐使用 ssh-key 来操作仓库,不再使用账户密码。

-

重要参数:block_auto_created_users=true 的时候则自动注册的账户是被锁定的,需要管理员账户手动的为这些账户解锁,可以改为 false

-

编辑配置文件引入第三方:

sudo vim /etc/gitlab/gitlab.rb,在 309 行有默认的一些注释配置- 其中 oauth2_generic 模块默认是没有,需要自己 gem,其他主流的那些都自带,配置即可使用。

gitlab_rails['omniauth_enabled'] = true

gitlab_rails['omniauth_allow_single_sign_on'] = ['google_oauth2', 'facebook', 'twitter', 'oauth2_generic']

gitlab_rails['omniauth_block_auto_created_users'] = false

gitlab_rails['omniauth_sync_profile_attributes'] = ['email','username']

gitlab_rails['omniauth_external_providers'] = ['google_oauth2', 'facebook', 'twitter', 'oauth2_generic']

gitlab_rails['omniauth_providers'] = [

{

"name"=> "google_oauth2",

"label"=> "Google",

"app_id"=> "123456",

"app_secret"=> "123456",

"args"=> {

"access_type"=> 'offline',

"approval_prompt"=> '123456'

}

},

{

"name"=> "facebook",

"label"=> "facebook",

"app_id"=> "123456",

"app_secret"=> "123456"

},

{

"name"=> "twitter",

"label"=> "twitter",

"app_id"=> "123456",

"app_secret"=> "123456"

},

{

"name" => "oauth2_generic",

"app_id" => "123456",

"app_secret" => "123456",

"args" => {

client_options: {

"site" => "http://sso.cdk8s.com:9090/sso",

"user_info_url" => "/oauth/userinfo"

},

user_response_structure: {

root_path: ["user_attribute"],

attributes: {

"nickname": "username"

}

}

}

}

]

资料

- http://blog.smallmuou.xyz/git/2016/03/11/关于Gitlab若干权限问题.html

- https://zhangmengpl.gitbooks.io/gitlab-guide/content/whatisgitflow.html

- https://blog.coderstory.cn/2017/02/01/gitlab/

- https://xuanwo.org/2016/04/13/gitlab-install-intro/

- https://softlns.github.io/2016/11/14/jenkins-gitlab-docker/

Rinetd:一个用户端口重定向工具

Rinetd:一个用户端口重定向工具

概述

Rinetd 是一个 TCP 连接重定向工具,当我们需要进行流量的代理转发的时候,我们可以通过该工具完成。Rinetd 是一个使用异步 IO 的单进程的服务,它可以处理配置在 /etc/rinetd.conf 中的任意数量的连接,但是并不会过多的消耗服务器的资源。Rinetd 不能用于重定向 FTP 服务,因为 FTP 服务使用了多个 socket 进行通讯。

如果需要 Rinetd 随系统启动一起启动,可以在 /etc/rc.local 中添加启动命令 /usr/sbin/rinetd。在没有使用 -c 选项指定其他配置文件的情况下,Rinetd 使用默认的配置文件 /etc/rinetd.conf。

快速使用

git clone https://github.com/boutell/rinetd.git

cd rinetd

make && make install

echo "0.0.0.0 8080 www.baidu.com 80" > /etc/rinetd.conf

rinetd -c /etc/rinetd.conf

这里所有对 Rinetd 宿主机 8080 端口的请求都会被转发到 Baidu 上,注意: 保指定的端口没有被其他应用占用,如上面的 8080 端口。使用 kill -1 信号可以使 Rinetd 重新加载配置信息而不中断现有的连接。

转发规则

Rinetd 的大部分配置都是配置转发规则,规则如下:

bindaddress bindport connectaddress connectport

源地址 源端口 目的地址 目的端口

这里的地址可以是主机名也可以是 IP 地址。

如要将对 192.1.1.10:8080 的请求代理到 http://www.example.com:80 上,参照上面的规则配置如下:

www.example.com 80 192.1.1.10:8080

这里将对外的服务地址 http://www.example.com 重定向到了内部地址 192.1.1.10 的 8080 端口上。通常 192.1.1.10:8080 在防火墙的内部,外部请求并不能直接访问。如果我们源地址使用 IP 地址 0.0.0.0 ,那么代表将该服务器所有 IP 地址对应端口上的请求都代理到目的地址上。

权限控制

Rinetd 使用 allow pattern 和 deny pattern 两个关键字进行访问权限的控制。如:

allow 192.1.5.*

deny 192.1.5.1

这里 pattern 的内容必须是一个 IP 地址,不能是主机名或者域名。可以使用 ? 匹配 0 - 9 的任意一个字符,使用 * 匹配 0 -9 的任意个的字符。在转发规则之前配置的权限规则会作为一个全局生效的规则生效,在转发规则之后配置的权限规则仅适用于本条转发规则。校验的过程是先校验是否能够通过全局规则,再校验特定的规则。

日志收集

Rinetd 可以产生使用制表符分隔的日志或者 Web 服务器下的通用日志格式。默认情况下,Rinetd 不产生日志,如果需要获取日志,需要添加配置:

logfile /var/log/rinetd.log

如果需要采集 Web 通用格式的日志,需要添加配置:

gcommon